- Cisco Scsi & Raid Devices Driver Downloads

- See Full List On Kb.iu.edu

- Cisco SCSI - Image Results

- Scsi Device Driver

- Adaptec Scsi Drivers

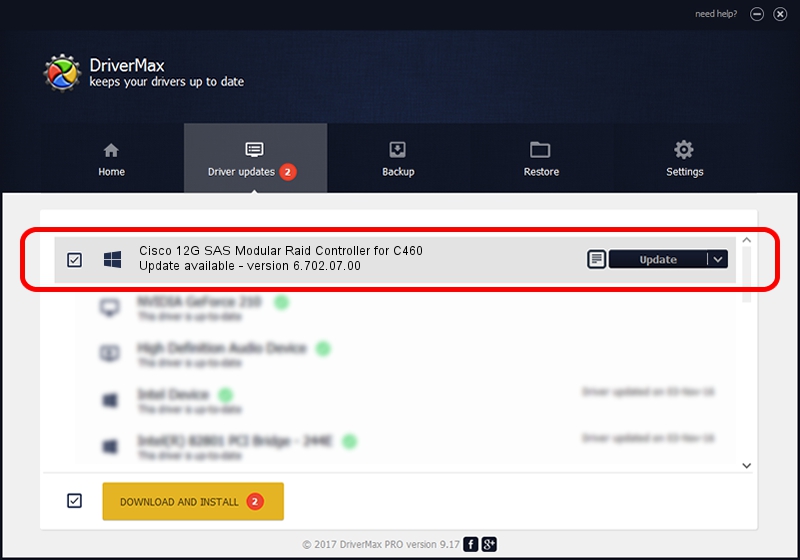

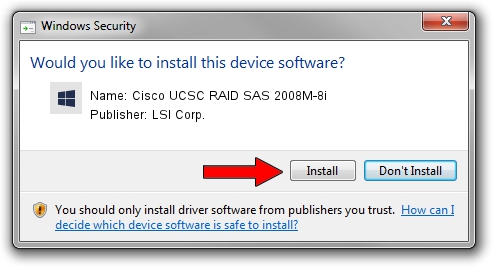

- Cisco Scsi & Raid Devices Driver Download Windows 7

Tips on tuning VMware ESXi for EqualLogic SANs.

- Install the Dell EqualLogic Multipathing Extension Module (MEM)

- Download from here: https://eqlsupport.dell.com/support/download.aspx?id=1484

- Important: Unzip the package. Inside it is another zip file, and that inner file is the one to upload to a datastore so that it's accessible from the host.

- SSH into the host and run the following (substituting your version of MEM). I found that I sometimes needed to use the actual path name instead of the datastore's friendly name for this to work: `esxcli software vib install --depot /vmfs/volumes/vmfsvol/dell/dell-eql-mem-esx5-1.2.292203.zip`

(Note the two dashes in front of `depot`.) Before rebooting, complete the next section.

- Disable large receive offload (LRO)

- First, check whether it's enabled: `esxcfg-advcfg -g /Net/TcpipDefLROEnabled`

- If it is enabled, disable it: `esxcfg-advcfg -s 0 /Net/TcpipDefLROEnabled`

- Reboot

- Tune the virtual and physical networks

- Change the MTU to 9000 on the virtual iSCSI switch and virtual iSCSI NICs

- Change the MTU on the physical iSCSI switches. On some Cisco switches this is a global setting, on others it is an interface setting, and some require both.

- Tune the iSCSI Initiator

- Go to the host -> Configuration tab -> Storage Adapters -> iSCSI Initiator -> Properties -> Advanced

- Change LoginTimeout from 5 to 60

- Uncheck DelayedAck. (Update: for the DelayedAck change to take effect, the static and dynamic iSCSI discovery entries need to be deleted and the host rebooted.)

- Tune the VM

- Put each VMDK on its own virtual SCSI/SAS controller (e.g., nodes 1:0, 2:0, 3:0, NOT 1:0, 1:1, 1:2)

- Format the partitions with a 64K cluster (allocation unit) size

- For VMs that need high IOPS, convert the virtual storage controller from LSI Logic to VMware Paravirtual (PVSCSI). The easiest way is to change the controller for the data drive first and reboot (so that the drivers get installed), then convert the boot drive's controller.

- Test your VM speed with ATTO Disk Benchmark
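The jumbo-frame step above can be sanity-checked with a little arithmetic. The sketch below assumes IPv4, TCP headers without options, and the standard per-frame Ethernet overhead (preamble, MAC header, FCS, inter-frame gap). It estimates the fraction of wire bandwidth that carries TCP payload at MTU 1500 versus 9000, and computes the largest ICMP payload that fits in one unfragmented packet. That second number is what you would typically pass to `vmkping` with don't-fragment set (for example `vmkping -d -s 8972 <target IP>`; check that your ESXi version supports `-d`) to confirm that jumbo frames work end to end across the vSwitch and the physical switches. The helper names are illustrative and not part of any VMware or Dell tool.

```python
IPV4_HEADER = 20
TCP_HEADER = 20   # assumes no TCP options
ICMP_HEADER = 8
# Per-frame cost on the wire: preamble+SFD (8), MAC header (14), FCS (4), inter-frame gap (12)
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of wire bandwidth that carries TCP payload for full-size frames."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    return payload / (mtu + ETHERNET_OVERHEAD)


def max_unfragmented_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in a single IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_payload_efficiency(mtu):.1%} payload efficiency, "
              f"ping payload {max_unfragmented_ping_payload(mtu)} bytes")
```

Running it prints about 94.9% for MTU 1500 and 99.1% for MTU 9000, with ping payloads of 1472 and 8972 bytes respectively.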

Upon completing this chapter, you will be able to:

- Explain the purpose of each Upper Layer Protocol (ULP) commonly used in modern storage networks

- Describe the general procedures used by each ULP discussed in this chapter

The goal of this chapter is to quickly acclimate readers to the standard upper-layer storage protocols currently being deployed. To that end, we provide a conceptual description and brief procedural overview for each ULP. The procedural overviews are greatly simplified and should not be considered technically complete. Procedural details are provided in subsequent chapters.

iSCSI

This section provides a brief introduction to the Internet Small Computer System Interface (iSCSI) protocol.

iSCSI Functional Overview

As indicated in Chapter 2, 'The OSI Reference Model Versus Other Network Models,' iSCSI is a Small Computer System Interface (SCSI) Transport Protocol. The Internet Engineering Task Force (IETF) began working on iSCSI in 2000 and subsequently published the first iSCSI standard in 2004. iSCSI facilitates block-level initiator-target communication over TCP/IP networks. In doing so, iSCSI completes the storage over IP model, which supported only file-level protocols (such as Network File System [NFS], Common Internet File System [CIFS], and File Transfer Protocol [FTP]) in the past. To preserve backward compatibility with existing IP network infrastructure components and to accelerate adoption, iSCSI is designed to work with the existing TCP/IP architecture. iSCSI requires no special modifications to TCP or IP. All underlying network technologies supported by IP can be incorporated as part of an iSCSI network, but most early deployments are expected to be based solely on Ethernet. Other lower-layer technologies eventually will be leveraged as iSCSI deployments expand in scope. iSCSI is also designed to work with the existing SCSI architecture, so no special modifications to SCSI are required for iSCSI adoption. This ensures compatibility with a broad portfolio of host operating systems and applications.

iSCSI seamlessly fits into the traditional IP network model in which common network services are provided in utility style. Each of the IP network service protocols performs a single function very efficiently and is available for use by every 'application' protocol. iSCSI is an application protocol that relies on IP network service protocols for name resolution (Domain Name System [DNS]), security (IPsec), flow control (TCP windowing), service location (Service Location Protocol [SLP] and Internet Storage Name Service [iSNS]), and so forth. This simplifies iSCSI implementation for product vendors by eliminating the need to develop their own solution for each network service requirement.

When the IETF first began developing the iSCSI protocol, concerns about the security of IP networks prompted the IETF to require IPsec support in every iSCSI product. This requirement was later deemed too burdensome considering the chip-level technology available at that time. So, the IETF made IPsec support optional in the final iSCSI standard (Request for Comments [RFC] 3720). IPsec is implemented at the OSI network layer and complements the authentication mechanisms implemented in iSCSI. If IPsec is used in an iSCSI deployment, it may be integrated into the iSCSI devices or provided via external devices (such as IP routers). The iSCSI standard stipulates which specific IPsec features must be supported if IPsec is integrated into the iSCSI devices. If IPsec is provided via external devices, the feature requirements are not specified. This allows shared external IPsec devices to be configured as needed to accommodate a wide variety of pass-through protocols. Most iSCSI deployments currently do not use IPsec.

One of the primary design goals of iSCSI is to match the performance (subject to underlying bandwidth) and functionality of existing SCSI Transport Protocols. As Chapter 3, 'An Overview of Network Operating Principles,' discusses, the difference in underlying bandwidth of iSCSI over Gigabit Ethernet (GE) versus Fibre Channel Protocol (FCP) over 2-Gbps Fibre Channel (FC) is not as significant as many people believe. Another oft-misunderstood fact is that very few 2-Gbps Fibre Channel Storage Area Networks (FC-SANs) are fully utilized. These factors allow companies to build block-level storage networks using a rich selection of mature IP/Ethernet infrastructure products at comparatively low prices without sacrificing performance. Unfortunately, many storage and switch vendors have propagated the myth that iSCSI can be used only in low-performance environments. Compounding this myth is the cost advantage of iSCSI, which enables cost-effective attachment of low-end servers to block-level storage networks. A low-end server often costs about the same as the pair of fully functional FC Host Bus Adapters (HBAs) required to provide redundant FC-SAN connectivity. Even with the recent introduction of limited-functionality HBAs, FC attachment of low-end servers is difficult to cost-justify in many cases. So, iSCSI is currently being adopted primarily for low-end servers that are not SAN-attached. As large companies seek to extend the benefits of centralized storage to low-end servers, they are considering iSCSI. Likewise, small businesses, which have historically avoided FC-SANs altogether due to cost and complexity, are beginning to deploy iSCSI networks.

That does not imply that iSCSI is simpler to deploy than FC, but many small businesses are willing to accept the complexity of iSCSI in light of the cost savings. It is believed that iSCSI (along with the other IP Storage [IPS] protocols) can eventually breathe new life into the Storage Service Provider (SSP) market. In the SSP market, iSCSI gives initiators secure access to centralized storage located at an SSP Internet Data Center (IDC) by removing the distance limitations of FC. Despite the current adoption trend in low-end environments, iSCSI is a very robust technology capable of supporting relatively high-performance applications. As existing iSCSI products mature and additional iSCSI products come to market, iSCSI adoption is likely to expand into high-performance environments.

Even though some storage array vendors already offer iSCSI-enabled products, most storage products do not currently support iSCSI. By contrast, iSCSI TCP Offload Engines (TOEs) and iSCSI drivers for host operating systems are widely available today. This has given rise to iSCSI gateway devices that convert iSCSI requests originating from hosts (initiators) to FCP requests that FC-attached storage devices (targets) can understand. The current generation of iSCSI gateways is characterized by low-port-density devices designed to aggregate multiple iSCSI hosts. Thus, the iSCSI TOE market has suffered from low demand. As more storage array vendors introduce native iSCSI support in their products, use of iSCSI gateway devices will become less necessary. In the long term, it is likely that companies will deploy pure FC-SANs and pure iSCSI-based IP-SANs (see Figures 1-1 and 1-2, respectively) without iSCSI gateways, and that iSCSI TOEs will become commonplace. That said, iSCSI gateways that add value other than mere protocol conversion might remain a permanent component in the SANs of the future. Network-based storage virtualization is a good example of the types of features that could extend the useful life of iSCSI gateways. Figure 4-1 illustrates a hybrid SAN built with an iSCSI gateway integrated into an FC switch. This deployment approach is common today.

Figure 4-1 Hybrid SAN Built with an iSCSI Gateway

Another way to accomplish iSCSI-to-FCP protocol conversion is to incorporate iSCSI into the portfolio of protocols already supported by Network Attached Storage (NAS) filers. Because NAS filers natively operate on TCP/IP networks, iSCSI is a natural fit. Some NAS vendors already have introduced iSCSI support into their products, and it is expected that most (if not all) other NAS vendors eventually will follow suit. Another emerging trend in NAS filer evolution is the ability to use FC on the backend. A NAS filer is essentially an optimized file server; therefore the problems associated with the DAS model apply equally to NAS filers and traditional servers. As NAS filers proliferate, the distributed storage that is captive to individual NAS filers becomes costly and difficult to manage. Support for FC on the backend allows NAS filers to leverage the FC-SAN infrastructure that many companies already have. For those companies that do not currently have an FC-SAN, iSCSI could be deployed as an alternative behind the NAS filers (subject to adoption of iSCSI by the storage array vendors). Either way, using a block-level protocol behind NAS filers enables very large-scale consolidation of NAS storage into block-level arrays. In the long term, it is conceivable that all NAS protocols and iSCSI could be supported natively by storage arrays, thus eliminating the need for an external NAS filer. Figure 4-2 illustrates the model in which an iSCSI-enabled NAS filer is attached to an FC-SAN on the backend.

Figure 4-2 iSCSI-Enabled NAS Filer Attached to an FC-SAN

iSCSI Procedural Overview

When a host attached to an Ethernet switch first comes online, it negotiates operating parameters with the switch. This is followed by IP initialization during which the host receives its IP address (if the network is configured for dynamic address assignment via Dynamic Host Configuration Protocol [DHCP]). Next, the host discovers iSCSI devices and targets via one of the methods discussed in Chapter 3, 'An Overview of Network Operating Principles.' The host then optionally establishes an IPsec connection followed by a TCP connection to each discovered iSCSI device. The discovery method determines what happens next.

If discovery is accomplished via manual or automated configuration, the host optionally authenticates each target within each iSCSI device and then opens a normal iSCSI session with each successfully authenticated target. SCSI Logical Unit Number (LUN) discovery is the final step. The semi-manual discovery method requires an additional intermediate step. All iSCSI sessions are classified as either discovery or normal. A discovery session is used exclusively for iSCSI target discovery. All other iSCSI tasks are accomplished using normal sessions. Semi-manual configuration requires the host to establish a discovery session with each iSCSI device. Target discovery is accomplished via the iSCSI SendTargets command. The host then optionally authenticates each target within each iSCSI device. Next, the host opens a normal iSCSI session with each successfully authenticated target and performs SCSI LUN discovery. It is common for the discovery session to remain open with each iSCSI device while normal sessions are open with each iSCSI target.
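The SendTargets step can be made concrete with a small parser. This is a minimal sketch, assuming the text-key format from RFC 3720: the response data is a series of NUL-terminated `key=value` pairs in which each `TargetName` is followed by zero or more `TargetAddress=address[:port],portal-group-tag` entries, with 3260 as the default port. It covers only decoding the text; establishing the discovery session, login, and PDU framing are out of scope, and the target names and addresses in the usage example are hypothetical.

```python
from __future__ import annotations

DEFAULT_ISCSI_PORT = 3260  # well-known iSCSI TCP port


def parse_address(value: str) -> tuple[str, int, int | None]:
    """Split 'address[:port][,tpgt]' into (host, port, portal group tag)."""
    addr, _, tpgt = value.partition(",")
    if addr.startswith("["):  # bracketed IPv6 literal, e.g. [2001:db8::1]:3260
        host, _, rest = addr[1:].partition("]")
        port = int(rest[1:]) if rest.startswith(":") else DEFAULT_ISCSI_PORT
    elif ":" in addr:  # IPv4 address or DNS name with an explicit port
        host, _, port_text = addr.rpartition(":")
        port = int(port_text)
    else:
        host, port = addr, DEFAULT_ISCSI_PORT
    return host, port, int(tpgt) if tpgt else None


def parse_send_targets(data: bytes) -> dict[str, list[tuple[str, int, int | None]]]:
    """Group each TargetAddress under the TargetName that precedes it."""
    targets: dict[str, list[tuple[str, int, int | None]]] = {}
    current: str | None = None
    # Every key=value pair is NUL-terminated, so splitting leaves an empty tail.
    for field in data.decode("utf-8").split("\x00"):
        if not field:
            continue
        key, _, value = field.partition("=")
        if key == "TargetName":
            current = value
            targets.setdefault(current, [])
        elif key == "TargetAddress" and current is not None:
            targets[current].append(parse_address(value))
    return targets


if __name__ == "__main__":
    # Hypothetical SendTargets response received on a discovery session.
    response = (
        b"TargetName=iqn.2001-05.com.example:vol1\x00"
        b"TargetAddress=10.0.0.10:3260,1\x00"
        b"TargetName=iqn.2001-05.com.example:vol2\x00"
        b"TargetAddress=10.0.0.11,1\x00"
        b"TargetAddress=[2001:db8::1]:3261,2\x00"
    )
    for name, portals in parse_send_targets(response).items():
        print(name, portals)
```

Each resulting target name, with its list of portals, is what the host would then use to open normal sessions and perform LUN discovery.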

Each SCSI command is assigned an iSCSI Command Sequence Number (CmdSN). The iSCSI CmdSN has no influence on packet tracking within the SCSI Interconnect. All packets comprising SCSI commands, data, and status are tracked in flight via the TCP sequence-numbering mechanism. TCP sequence numbers are directional and represent an increasing byte count starting at the initial sequence number (ISN) specified during TCP connection establishment. The TCP sequence number is not reset with each new iSCSI CmdSN. There is no explicit mapping of iSCSI CmdSNs to TCP sequence numbers. iSCSI complements the TCP sequence-numbering scheme with PDU sequence numbers. All PDUs comprising SCSI commands, data, and status are tracked in flight via the iSCSI CmdSN, Data Sequence Number (DataSN), and Status Sequence Number (StatSN), respectively. This contrasts with the FCP model.
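The independence of CmdSN and TCP sequence numbers can be illustrated with a toy model. The sketch below is a simplification, not an iSCSI implementation: it assumes a single TCP connection, treats the command PDUs as the first bytes sent after the SYN (ignoring the login phase), and uses hypothetical PDU sizes. CmdSN advances by one per command, while the TCP sequence number advances by the number of bytes sent and wraps modulo 2^32. The comparison helper uses the RFC 1982 serial-number arithmetic that RFC 3720 specifies for its 32-bit sequence numbers, so ordering stays correct across the wrap.

```python
from __future__ import annotations

from dataclasses import dataclass

MOD32 = 2**32  # TCP sequence numbers and iSCSI CmdSN are both 32-bit counters


def serial_lt(a: int, b: int) -> bool:
    """RFC 1982 'a < b' for 32-bit serial numbers (correct across wraparound)."""
    return a != b and (b - a) % MOD32 < 2**31


@dataclass
class SentPdu:
    cmd_sn: int   # iSCSI Command Sequence Number carried in the PDU header
    tcp_seq: int  # TCP sequence number of the PDU's first byte
    length: int   # bytes the PDU occupies in the TCP byte stream


def send_commands(isn: int, first_cmd_sn: int, pdu_lengths: list[int]) -> list[SentPdu]:
    """Number consecutive command PDUs on a single (simplified) TCP connection."""
    seq = (isn + 1) % MOD32  # the SYN consumes one sequence number
    cmd_sn = first_cmd_sn
    sent: list[SentPdu] = []
    for length in pdu_lengths:
        sent.append(SentPdu(cmd_sn, seq, length))
        cmd_sn = (cmd_sn + 1) % MOD32  # +1 per command, regardless of its size
        seq = (seq + length) % MOD32   # +bytes sent; never reset per command
    return sent


if __name__ == "__main__":
    # Hypothetical ISN near the top of the range to force a wrap; hypothetical
    # sizes: a 48-byte header-only PDU, one carrying 8192 bytes of data, another header-only PDU.
    for pdu in send_commands(isn=MOD32 - 100, first_cmd_sn=1, pdu_lengths=[48, 48 + 8192, 48]):
        print(pdu)
```

Note that nothing in the model maps a CmdSN to a particular TCP sequence number: the byte offsets depend entirely on how much data each PDU happens to carry.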